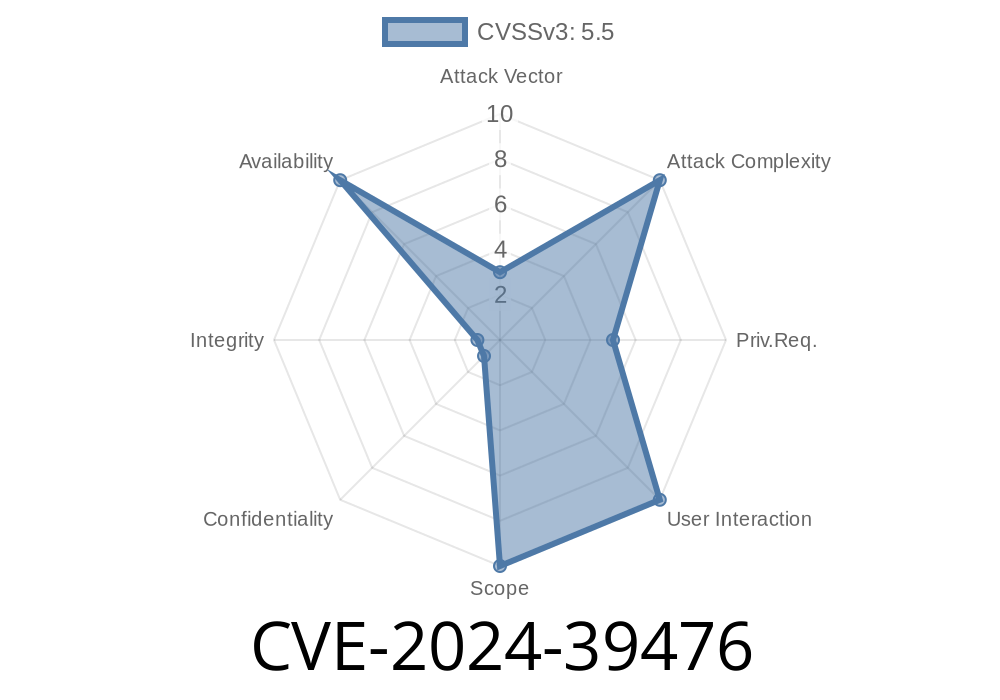

In early 2024, a subtle yet critical bug was discovered and patched in the Linux kernel's RAID5 implementation. The issue, now tracked as CVE-2024-39476, could silently cause systems running RAID5 arrays to deadlock in certain circumstances—a nightmare for reliability and uptime. Let's break down what happened, why it was dangerous, and how the underlying flaw could have been leveraged in real-world environments.

Table of Contents

1. [Background: Linux MD/RAID and raid5d()](#background)

[References and Further Reading](#references)

## 1. Background: Linux MD/RAID and raid5d()

RAID (Redundant Array of Independent Disks) is commonly managed on Linux using the md (Multiple Device) subsystem. raid5d() is the core daemon thread managing RAID5 arrays.

When you run commands like mdadm --create or lvconvert --type raid5, the kernel spawns this daemon to handle recovery, IO, and coordination between drives.

The vulnerability arises from how the RAID5 daemon (raid5d) interacts with two internal mechanisms:

- MD_SB_CHANGE_PENDING: A flag indicating pending changes to the RAID's superblock (the kernel's metadata for RAID status).

- reconfig_mutex: The mutex that serializes reconfiguration of the array.

These interact as follows:

- md_check_recovery() needs to hold the reconfig_mutex to clear MD_SB_CHANGE_PENDING.

- However, while the flag is set, raid5d() enters a tight loop, trying (and failing) to issue IO until the flag clears.

- If another context holds the mutex, raid5d() gets stuck: it burns CPU, stalls IO, and can cause the system to hang or degrade.
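To make the relationship between the flag and the mutex concrete, here is a minimal user-space sketch in C11. It is not kernel code: `struct sketch_mddev`, the `sketch_*` helpers, and the bit position are hypothetical names invented for illustration, and an `atomic_flag` stands in for reconfig_mutex. It only shows that the flag is cleared when the try-lock succeeds and stays set when another context holds the lock.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical bit position; the kernel defines its own value. */
#define SB_CHANGE_PENDING_BIT 2u

/* Hypothetical stand-in for the array state, not the kernel's struct mddev. */
struct sketch_mddev {
    atomic_uint sb_flags;       /* flag word, one bit per pending change */
    atomic_flag reconfig_lock;  /* stands in for reconfig_mutex */
};

/* Try-lock: returns true only if the lock was free and is now ours. */
bool sketch_trylock(struct sketch_mddev *m)
{
    return !atomic_flag_test_and_set(&m->reconfig_lock);
}

void sketch_unlock(struct sketch_mddev *m)
{
    atomic_flag_clear(&m->reconfig_lock);
}

/* Returns true while the superblock-change bit is still set. */
bool sketch_sb_pending(struct sketch_mddev *m)
{
    return (atomic_load(&m->sb_flags) & (1u << SB_CHANGE_PENDING_BIT)) != 0;
}

/*
 * Models the part of md_check_recovery() that matters here: the flag is
 * cleared only while holding the lock. Returns true if it was cleared.
 */
bool sketch_check_recovery(struct sketch_mddev *m)
{
    if (!sketch_trylock(m))
        return false;           /* lock held elsewhere: flag stays set */
    atomic_fetch_and(&m->sb_flags, ~(1u << SB_CHANGE_PENDING_BIT));
    sketch_unlock(m);
    return true;
}
```

If some other context calls `sketch_trylock()` first and keeps the lock, every later `sketch_check_recovery()` returns false and `sketch_sb_pending()` stays true, which is the state the daemon cannot get out of by itself.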

The core logic (simplified):

```c
while (MD_SB_CHANGE_PENDING) {
    md_check_recovery(mddev);
    // Can't make progress, but don't break out either...
    issue_pending_io(mddev); // Blocks until flag clears
}
```

If md_check_recovery() can take the mutex needed to clear the flag, the loop proceeds. If another context holds the mutex, the loop just spins and never makes progress.

Trigger Scenario

The bug was reproducible with LVM2 tests, particularly lvconvert-raid-takeover.sh. A heavy IO/metadata update followed by a conversion triggered the problem.

Attackers or rogue processes could theoretically exploit this by holding the reconfig_mutex (e.g., by initiating a long-running RAID reconfiguration), preventing superblock updates, and stalling IO across the array—resulting in a form of denial of service (DoS).

Here's what the faulty logic looked like before the patch (pseudocode):

```c
void raid5d(struct mddev *mddev) {
    while (has_pending_io(mddev)) {
        if (MD_SB_CHANGE_PENDING) {
            // Needs reconfig_mutex to clear the flag. It only try-locks,
            // so it returns with the flag still set if the mutex is
            // held elsewhere, and the loop keeps going.
            md_check_recovery(mddev);
        }
        // Cannot issue anything until MD_SB_CHANGE_PENDING clears
        issue_io(mddev);
    }
}
```

The deadlock happens because issue_io() cannot proceed until MD_SB_CHANGE_PENDING is cleared, only md_check_recovery() can clear it, and md_check_recovery() can do so only if it gets the reconfig_mutex.
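The circular dependency can be reproduced in a small, single-threaded user-space model. This is a sketch under stated assumptions, not the kernel's implementation: `struct model_mddev` and the `model_*` functions are hypothetical, the contended mutex is reduced to a boolean, and `max_iters` is an artificial bound added so the otherwise endless spin terminates.

```c
#include <stdbool.h>

/* Hypothetical user-space model of the array state; not a kernel structure. */
struct model_mddev {
    bool sb_change_pending;     /* stands in for MD_SB_CHANGE_PENDING */
    bool mutex_held_elsewhere;  /* stands in for a contended reconfig_mutex */
    int  pending_io;            /* queued requests not yet issued */
};

/* Models md_check_recovery(): clears the flag only if the try-lock succeeds. */
void model_check_recovery(struct model_mddev *m)
{
    if (m->mutex_held_elsewhere)
        return;                 /* try-lock failed, flag stays set */
    m->sb_change_pending = false;
}

/* Models issuing IO: nothing can be issued while the flag is set. */
bool model_issue_io(struct model_mddev *m)
{
    if (m->sb_change_pending || m->pending_io == 0)
        return false;
    m->pending_io--;
    return true;
}

/*
 * Pre-patch shape: keep looping until all IO has been issued.
 * Returns the number of iterations that made no progress.
 */
long model_raid5d_old(struct model_mddev *m, long max_iters)
{
    long wasted = 0;

    for (long i = 0; i < max_iters && m->pending_io > 0; i++) {
        if (m->sb_change_pending)
            model_check_recovery(m);
        if (!model_issue_io(m))
            wasted++;           /* spun once without issuing anything */
    }
    return wasted;
}
```

With `mutex_held_elsewhere` set, every iteration up to `max_iters` is wasted and `pending_io` never drops. With the mutex free, the first iteration clears the flag and the queue drains with no wasted iterations.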

- Privilege Required: Root or equivalent privileges (access to RAID management tools) are needed.

- Impact: Denial of service. By scripting a long hold of the mutex (e.g., via a suspended reconfiguration), all IO to the RAID device can be blocked indefinitely.

- Persistence: The array may require a reboot to recover.

- Data Risk: Data loss is unlikely, but the stall can disrupt uptime, monitoring, and application reliability.

Exploit Example

An advanced attacker could write a tool to hold the reconfig_mutex for an abnormally long period using a kernel exploit or by abusing IOCTLs:

```c
// Hypothetical: holding the RAID reconfig lock.
// md_ioctl() and RAID_START_RECONFIG are placeholder names,
// not a real user-space interface.
md_ioctl(fd, RAID_START_RECONFIG, ...); // sudo/root privileges required
// suspend process here, causing IO on /dev/mdX to hang indefinitely
```

Note: This is illustrative; actual exploitability depends on the local environment and kernel patch level.

## 5. Patch Walkthrough

The fix (commit 6fb63faad525) takes inspiration from RAID1/10 code:

- Skip issuing IO if the MD_SB_CHANGE_PENDING flag is still set *after* an attempted recovery.

- The daemon thread is *not* stuck in a loop. It simply backs off and is reliably woken when the mutex is available and the flag is cleared.

Patched logic (pseudocode):

```c
void raid5d(struct mddev *mddev) {
    if (MD_SB_CHANGE_PENDING) {
        if (!try_acquire(reconfig_mutex)) {
            // Don't issue IO, wait for notification
            return;
        }
        md_check_recovery(mddev);
        release(reconfig_mutex);
        if (MD_SB_CHANGE_PENDING) {
            // Still pending, so don't proceed
            return;
        }
    }
    issue_io(mddev); // Now safe
}
```

This fixes the deadlock and aligns raid5d() with the RAID1/10 approach the patch was modeled on.
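The back-off behaviour can be sketched in the same user-space style. Again, this is an illustrative model under assumptions rather than the actual patch: `struct model_array`, `model_recovery()`, `model_raid5d_pass()`, and `model_release_and_wake()` are hypothetical names, and the wake-up is modelled as a plain function call when the other context drops the mutex.

```c
#include <stdbool.h>

/* Hypothetical user-space model of the array state; not a kernel structure. */
struct model_array {
    bool sb_change_pending;     /* stands in for MD_SB_CHANGE_PENDING */
    bool mutex_held_elsewhere;  /* stands in for a contended reconfig_mutex */
    int  pending_io;            /* queued requests not yet issued */
};

/* Models md_check_recovery(): clears the flag only if the try-lock succeeds. */
void model_recovery(struct model_array *a)
{
    if (!a->mutex_held_elsewhere)
        a->sb_change_pending = false;
}

/*
 * One daemon pass in the patched shape. Returns the number of requests
 * issued; 0 with IO still queued means the daemon backed off to sleep.
 */
int model_raid5d_pass(struct model_array *a)
{
    int issued = 0;

    if (a->sb_change_pending) {
        model_recovery(a);
        if (a->sb_change_pending)
            return 0;           /* still pending: skip IO, do not spin */
    }
    while (a->pending_io > 0) {
        a->pending_io--;
        issued++;
    }
    return issued;
}

/* Models the other context releasing the mutex, which wakes the daemon. */
int model_release_and_wake(struct model_array *a)
{
    a->mutex_held_elsewhere = false;
    return model_raid5d_pass(a);
}
```

A pass made while the mutex is held elsewhere returns immediately with nothing issued. Once `model_release_and_wake()` runs, the next pass clears the flag and drains the queue, so the daemon does one check per wake-up instead of spinning.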

## 6. References and Further Reading

- Original Discussion: LKML post by Xiao Ni

- Linux Kernel Patch:

- CVE Detail:

- LVM2 Test Reference: LVM2 test suite

- Linux MD/RAID Documentation: Kernel.org MD RAID documentation

TL;DR

- CVE-2024-39476 caused RAID5 arrays to hang if housekeeping could not proceed, due to tightly coupled locks and flag checks.

- The fix was to skip IO and let the daemon sleep until it is safe to proceed.

- While not trivially exploitable for remote code execution, this bug's ability to completely stall critical storage subsystems made it a priority fix.

Timeline

Published on: 07/05/2024 07:15:10 UTC

Last modified on: 08/02/2024 04:26:15 UTC